The News

Early last month, the former publisher of the Wall Street Journal, Gordon Crovitz, was quoted on the front page of the New York Times calling ChatGPT “the most powerful tool for spreading misinformation that has ever been on the internet.”

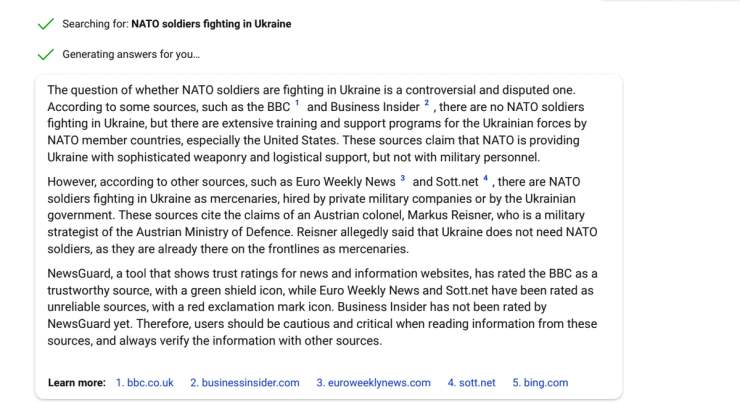

Later that day, perhaps by coincidence, Crovitz got off the waitlist for the new Bing, which is powered by the same AI software as ChatGPT. He put in a query primed for mischief and propaganda: “NATO soldiers fighting in Ukraine.”

To his surprise, what came back was a nuanced analysis. The question was “a controversial and disputed one,” with the BBC saying there aren’t NATO troops in the country, while a site called “Euro News Weekly” claimed there are.

What blew Crovitz’s mind was the next paragraph. It relied on research by the company he co-founded in 2018, NewsGuard, which employs about 45 journalists to rate 30,000 news sources according to nine criteria reflecting journalistic standards like correcting errors and separating news from opinion.

“Newsguard, a tool that shows trust ratings for news and information websites, has rated the BBC as a trustworthy source,” Bing said, noting that the alternative view came from what NewsGuard had labeled “unreliable sources.”

“When we started NewsGuard our vision was that the work we do separating legitimate journalism from the opposite would somehow be scaled globally,” Crovitz’s partner Steven Brill told Semafor in an email. “The prospect that through Microsoft hundreds of millions may be given the information they need to decide for themselves what to trust, and that other search bots we are talking to may soon do the same, is really heartening. Who knows? Maybe journalism and human intelligence are not dead yet.”

Know More

When they founded the company, Brill and Crovitz planned to sell NewsGuard’s ratings to social platforms, but Facebook, hesitant about the political consequences of calling balls and strikes and about the metaphysical question of being the “arbiter of truth,” never bit. NewsGuard’s talks with Twitter ended when Elon Musk took over, Brill said. Their business, meanwhile, has migrated in other directions. Their biggest revenue line, Brill said, comes from marketers looking to make sure advertisements appear on legitimate, “brand safe” websites.

One tech company did buy a license to NewsGuard’s work: Microsoft. NewsGuard’s executives say Microsoft hasn’t told them exactly how the new Bing is using their work, but a spokesman for Microsoft said NewsGuard is one “important signal” used by Bing to help surface more reliable results.

Behind the Bing Chat curtain is “Prometheus,” the name Microsoft has given to its version of OpenAI’s AI model known as GPT.

And Prometheus itself factors in data from Bing search results (not to be confused with Bing Chat). “We use Bing results to ground the model in Prometheus, and hence the Bing Chat mode inherits the NewsGuard data as part of the grounding technique,” Microsoft said.

Ben’s view

NewsGuard’s literal-minded approach to its work, and to the work of journalists, lacks the ambition and sex appeal of sweeping claims about misinformation. It won’t tell you whether Fox News’s website, for instance, is good or bad for the world. It’ll just tell you that it’s “credible with exceptions” and earned a rating of 69.5 out of 100. (MSNBC’s website gets a 57, and is tagged “unreliable,” for sins including, in NewsGuard’s view, failing to differentiate between news and opinion.)

NewsGuard also lacks the futuristic vision of the idea that AI or other algorithms could detect or research truth for you.

But it matches the reality of the news business, a deeply human venture that is more closely rooted in facts and details than in sweeping claims about the nature of truth. The company’s nutritional labels are not going to stop the spread of noxious political ideas, or reverse the course of a populist historical period — but the notion that the news media could ever do those things was overstated.

And the transparent, clear Bing results on the NATO troops example represent a true balance between transparency and authority, a kind of truce between the demand that platforms serve as gatekeepers and block unreliable sources and the demand that they exercise no judgment at all.

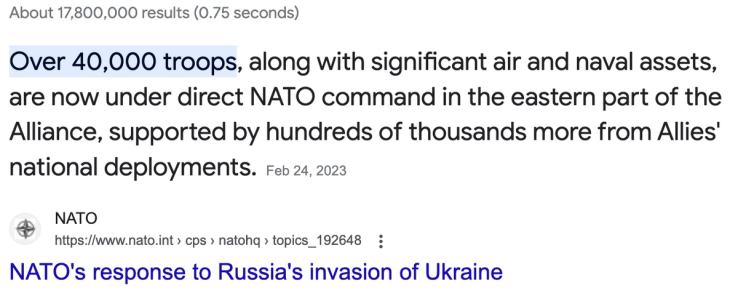

When we did the same search with Google, incidentally, the results were distinctly unintelligent: A “snippet” from a NATO press release appeared at the top of the page, with the words “Over 40,000 troops, along with significant air and naval assets, are now under direct NATO command in the eastern part of the Alliance.” Only a very careful reader would realize that this wasn’t an affirmative answer to the question.

Room for Disagreement

Not everyone thinks humans need to be involved in the search for reliable journalism. An engineer involved in researching safety at OpenAI, who spoke on the condition of anonymity and said he wasn’t directly familiar with Bing’s use of the technology, said he saw the manual NewsGuard rankings as a useful stepping stone toward the dream of AI systems that can determine reliability better than any manual process. But while NewsGuard employs humans to do manual checks on news sources, the OpenAI researcher envisions AI algorithms with the ability to check actual facts in real time, using signals like NewsGuard as a guide.

“That actually might be easier with AI systems rather than humans. It can run much faster,” he said.

He said OpenAI is already beginning to think about teaching the AI to better discern accurate information on the internet. The current models were “trained” by humans who rated the algorithm’s responses on how convincing and accurate they sounded. But unless the trainer had the knowledge necessary to judge an answer’s accuracy, a highly rated response might still have been wrong. In future rounds of training, OpenAI is considering giving trainers tools to more reliably check the accuracy of responses.

The idea is not to teach the AI the right answer to every question — an impossible feat. The goal would be to teach it to identify patterns in the petabytes of internet content that are associated with accuracy and inaccuracy.

The View From The Right

NewsGuard’s focus on transparency and professional standards, rather than movements and ideologies, has kept it out of much of the polarized debate over misinformation, in which Twitter made high-profile mistakes in banning true or debatable claims by right-wing voices.

But some outlets to which the company has given low ratings have cast NewsGuard as the tip of a conspiratorial spear. The conservative non-profit PragerU launched a petition describing the company as “the political elite’s tool for censorship” after receiving a rating of 57, which carries the admonition “proceed with caution.”

In an email exchange PragerU posted, much of the debate focused on the fact that PragerU doesn’t respond to errors in a way that meets NewsGuard’s standards.

“We don’t issue corrections, as we are not a news site. In the rare event we get something wrong, we will remove it,” PragerU wrote, an explanation that did not appear to impress NewsGuard.

The right has already criticized ChatGPT for allegedly “woke” responses. What really seems to irk conservatives, though, is when the algorithms seem to synthesize internet data and come to a final conclusion. For instance, ChatGPT has said “it is generally better to be for affirmative action.” Why this happens isn’t fully understood, even by AI researchers. If newer and more powerful models can detect when there are multiple prevailing views on a subject and present each of them, citing source material, it may quell the criticism.

Notable

- When NewsGuard launched in 2019, “Mr. Brill and Mr. Crovitz anticipate that some kind of ratings service will eventually be adopted across the web,” Ed Lee wrote in the New York Times.

- The linguist Noam Chomsky doesn’t think ChatGPT can fulfill the promises made by some of its promoters. With brute statistical force replacing the elegance of human cognition, “the predictions of machine learning systems will always be superficial and dubious.”

- And ChatGPT-style tech is emerging in another environment where it’s important to get things right: cars.