Reed’s view

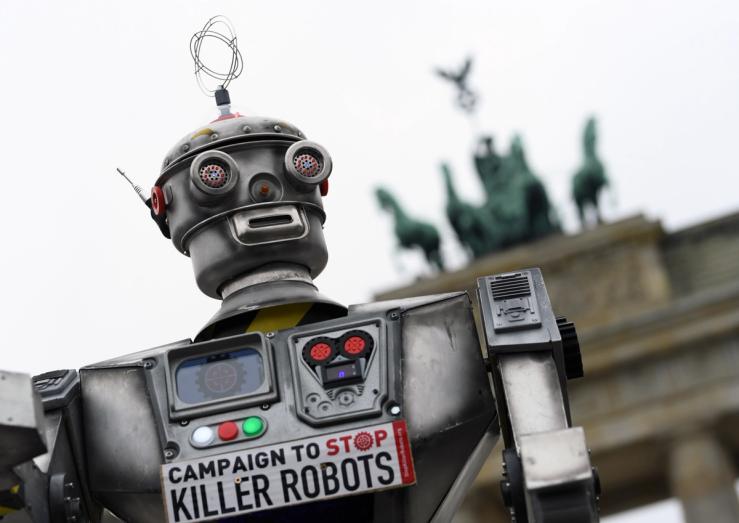

Is it time for society to start having a serious discussion about autonomous weapons? With Presidents Donald Trump and Xi Jinping set to meet on Thursday, and Taiwan sure to be a topic of discussion, it’s clear that the US and China are both investing serious resources into building drones, missiles, and other munitions capable of finding, following, and destroying targets and killing humans.

I’ve been thinking about this since talking to John Dulin, co-founder of defense tech startup Modern Intelligence, who persuaded me that this debate is stuck in the past.

I pointed out to Dulin that my Chinese-made robot vacuum cleaner has practically enough technology in it to be used in an autonomous weapon (though not a very good one). Militaries have gone much further. The remaining question is where we place the guardrails — and at what point we give software the power to kill.

Most people’s reflexive answer to that question is “never,” and the conventional wisdom is that killer systems require a “human in the loop”: an Air Force officer or civilian, in a building in Virginia or Kyiv, pushing the fatal button.

The problem is that autonomy in weapons is a continuum rather than an on-off switch. For instance, a drone can be flown remotely by a human and then switched to autonomous mode once a target is identified, so that jamming the control link can no longer stop it.

Going from today’s level of autonomy to scenarios where the AI finds the target on its own is mainly a software challenge, and one that is being tackled for civilian use in the private sector.

The hypotheticals are complicated: What about attacks moving too fast for humans? What if an adversary breaks the connection between the human and the weapon? It’s worth a public conversation on hard questions like the standard for accuracy. Deploying a weapon with a 99.9999% chance of hitting the intended target is very different from deploying one with a 90% chance. Where do you draw the line?
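To put rough numbers on that accuracy question, here is a minimal back-of-the-envelope sketch. It assumes every engagement is independent and has the same hit probability, which real systems would not guarantee, and the figure of 1,000 engagements is purely hypothetical. The function names are illustrative, not drawn from any real system.

```python
def expected_misses(hit_probability: float, engagements: int) -> float:
    """Expected number of misses, assuming independent, identical engagements."""
    return (1.0 - hit_probability) * engagements


def prob_at_least_one_miss(hit_probability: float, engagements: int) -> float:
    """Chance that at least one engagement misses, under the same assumption."""
    return 1.0 - hit_probability ** engagements


ENGAGEMENTS = 1_000  # hypothetical number of uses, chosen only for illustration

for p in (0.90, 0.999999):
    misses = expected_misses(p, ENGAGEMENTS)
    any_miss = prob_at_least_one_miss(p, ENGAGEMENTS)
    print(f"hit rate {p:.4%}: expected misses {misses:.3f}, "
          f"chance of at least one miss {any_miss:.4%}")
```

Under these assumptions, 1,000 engagements at 90% accuracy would mean about 100 expected misses, while 99.9999% would mean about 0.001. The gap between the two thresholds is a factor of 100,000, which is why the choice of standard matters so much.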

Room for Disagreement

In an opinion piece for IT publication CIO, cybersecurity expert Lee Barney wrote that there are more urgent threats to address than “killer robots.” Decreasing human connection, job replacement, and changing education priorities are all reshaping society — and forcing parents, businesses, and governments to change their ways.

Notable

- To protect civilians, the Pentagon should develop AI tools that fit its own modus operandi, which differs from how the civilian world operates, the deputy assistant secretary of Defense for cyber policy during the Biden administration told Politico.