The News

As companies prepare to spend trillions on the AI race, it’s still not fully understood why the models do what they do. And until these models are understood at a deep level, they can’t be trusted to do anything where failure is not an option.

Lately, some intriguing research papers have tackled this problem — a reminder that there may still be some fundamental breakthroughs that could upend the industry.

One paper, published Tuesday by startup Pathway, makes some big claims about a new kind of transformer model that the authors say more closely mimics the way human brains actually work. (AI researchers have long been inspired by the brain, but the comparisons are often a bit of a stretch.)

Know More

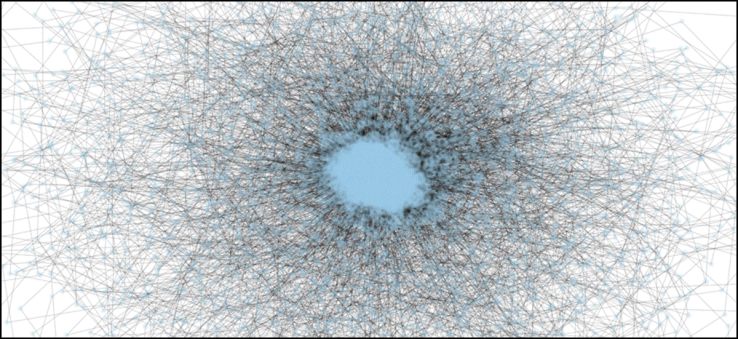

The challenge today is that chatbots tend to work as billions of artificial neurons connected together in dense layers, and those neurons start firing en masse when the LLM is working on a response. The firing appears to follow no particular order, so it’s extremely difficult to pick up on any patterns (some have tried, but none have cracked the code completely). Those artificial neurons also never change, no matter how many times they are activated.

The neurons in our brains aren’t linked in the same way. Most of them are connected to smaller groups, and seem to fire more selectively and predictably when they are needed for a specific purpose. And, unlike the neurons in LLMs, ours are constantly changing. When two neurons fire at the same time, the synapses between them grow stronger.

Pathway’s experimental model, which it calls “Dragon Hatchling,” uses a variation of the transformer architecture that powers today’s LLMs, but with differences that attempt to mimic the brain. The neurons in Dragon Hatchling are simplified compared to those in traditional LLMs, and the company says the architecture allows it to see a direct cause-and-effect relationship between the neurons firing and the output of the model.

Pathway also says it has found a way to constantly update the connections between artificial neurons — what’s known in neuroscience as Hebbian learning. So, while the core part of Pathway’s model will stay fixed, like a traditional LLM, another part of it will change as you interact with it, allowing it to continuously learn.
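
For readers who want to see the Hebbian idea in concrete terms, here is a minimal Python sketch of the textbook rule: a connection gets stronger each time the neurons on both ends fire together. This is not Pathway's implementation, which the article does not detail. The function name `hebbian_update`, the learning rate `lr`, and the tiny two-by-two network are all assumptions made for illustration.

```python
# Illustrative only: a textbook Hebbian update, not Pathway's actual method.
# All names and values here (hebbian_update, lr, the 2x2 network) are hypothetical.

def hebbian_update(weights, pre, post, lr=0.1):
    """Return new weights where weights[i][j] grows by lr * pre[i] * post[j].

    pre[i] is the activity of input neuron i, post[j] of output neuron j.
    A connection only changes when both of its neurons are active.
    """
    return [
        [w + lr * pre[i] * post[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]

# Two input neurons and two output neurons; every connection starts at zero.
plastic = [[0.0, 0.0], [0.0, 0.0]]

# Input neuron 0 and output neuron 1 fire together (1 = firing, 0 = silent).
pre = [1, 0]
post = [0, 1]

# Repeat the same co-activation three times.
for _ in range(3):
    plastic = hebbian_update(plastic, pre, post)

print(plastic)
```

After the loop, only the connection between the two neurons that fired together has grown; every other connection is still zero. In a design like the one the article describes, an update of this kind would apply only to the changeable part of the model, while the core weights stay fixed.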

It’s unclear whether Pathway is onto a real breakthrough, or just a fascinating hypothesis. The paper is a new way of combining a bunch of ideas that are already out there, but the method hasn’t yet been proven at scale. It’s possible that, as the model gets bigger, its theories won’t hold. Still, it might be the kind of academia-level idea that’s necessary to break through the current limitations of LLMs.