10 Minute Text

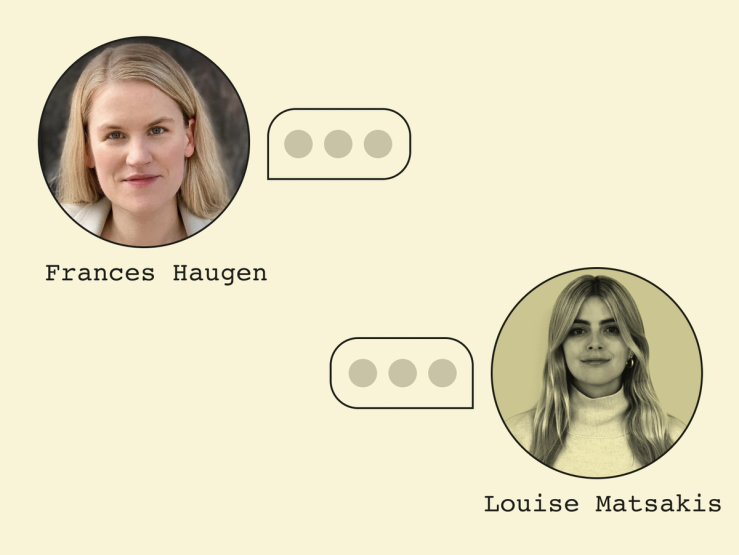

Facebook whistleblower Frances Haugen on what social media companies are still hiding

Former Facebook product manager Frances Haugen shared thousands of internal company documents with journalists and regulators in 2021, which she said proved the social network had prioritized its business over the wellbeing of its users.

Haugen texted with Semafor’s Louise Matsakis ahead of the publication of her book, The Power of One: How I Found the Strength to Tell the Truth and Why I Blew the Whistle on Facebook.

Louise Matsakis said:

L: Hey Frances, how’s it going today? Where are you working from?

Frances Haugen said:

F: I’m in Atlanta visiting my husband’s family.

Frances Haugen said:

F: Things are good! Just got back from Vancouver.

Frances Haugen said:

F: And meeting with a variety of folks across British Columbia about tech transparency and accountability.

Louise Matsakis said:

L: That’s exciting, I hope the weather isn’t too sticky in Atlanta this Friday. Can you share a selfie of where you’re at?

Frances Haugen said:

F: Grabbing lunch in the car from the Whole Foods hot bar.

Frances Haugen said:

F: It’s the most consistent gluten-free fast food option.

Louise Matsakis said:

L: Haha, that is a very relatable, classic lunch spot.

Louise Matsakis said:

L: So your book is about to come out, roughly two years after you became a whistleblower and started sharing internal Facebook documents with the world. In some ways, the social network has only become less politically and culturally relevant since then. Why should readers still care about how it behaves?

Frances Haugen said:

F: Facebook intentionally went into some of the most vulnerable places in the world and told people: “If you use our products, your data is free; if you use anything else, you’ll have to pay for it yourself,” because they wanted to keep competition from emerging.

Frances Haugen said:

F: As a result, today Facebook operates as the internet for billions of people, and that’s not ending any time soon, even if Facebook is uncool in the US.

Frances Haugen said:

F: Facebook and Instagram are still tentpoles of the internet for tens of millions of people in the US.

Frances Haugen said:

F: We ignore that at our own risk, given how many trust and safety people Facebook/Meta fired this year.

Louise Matsakis said:

L: I think that’s a good point. One of the most disturbing revelations I found in the documents you shared was how Facebook operated in the developing world. Did you address any of those cases in the book?

Frances Haugen said:

F: Yes, I walked through why safety by content moderation (censorship) doesn’t scale linguistically, and almost by definition leaves behind the most vulnerable.

Frances Haugen said:

F: Because it’s not cost-effective to rebuild safety systems for smaller languages.

Louise Matsakis said:

L: Right, there basically isn’t a feasible way for global platforms to address the nuances in every region or language.

Frances Haugen said:

F: Yes, which is why we need to design for risk up front.

Louise Matsakis said:

L: Can you expand on that more? What’s the alternative to “safety by content moderation,” as you put it?

Frances Haugen said:

F: There are many different strategies for reducing harmful content.

Frances Haugen said:

F: Things like adding time for reflection before sharing (e.g., requiring people to click on a link before they reshare it) or prioritizing content you asked for over content an algorithm thinks you want.

Frances Haugen said:

F: But the platforms won’t use any of them unless they have to publicly report more than just their profit-and-loss numbers.

Louise Matsakis said:

L: We’ve had transparency reports for around a decade now, which most of the platforms voluntarily publish, but you’re right that they don’t have to disclose specific information about how they enforce their rules. What additional data would you like them to be more transparent about?

Frances Haugen said:

F: The transparency reports are grossly misleading.

Frances Haugen said:

F: Because they get to choose what to report, how to define their metrics, etc., they choose information that makes them look good.

Frances Haugen said:

F: Here’s an example:

Frances Haugen said:

F: Defining the most viewed content as the content seen by the most people gives you very different results than defining it as the content _seen_ the most times.

Frances Haugen said:

F: Imagine a world where platforms had to report on how effective their systems were at finding children using their products.

Frances Haugen said:

F: Or how many kids were online after 10, 11 or 12 o’clock at night (let alone after 1 am or 2 am)?

Louise Matsakis said:

L: That’s a great example. It’s definitely true that you can use data to tell almost any story you want, especially when reporting is a voluntary process.

Frances Haugen said:

F: Also, the most viewed content report only covers the United States.

Frances Haugen said:

F: Which is where they spend the most money on safety.

Frances Haugen said:

F: Laws like the Digital Services Act will be transformative.

Frances Haugen said:

F: Because for the first time, the public will have the right to ask questions and get answers.

Frances Haugen said:

F: Which, amazingly, we have no right to today, even for basic questions like: How much money do you spend on keeping users in my country safe?

Frances Haugen said:

F: Any more questions? This has been more than ten min.

Louise Matsakis said:

L: Haha, I’m sorry to keep you here, your responses have just been really interesting! I just have one last question and then I’ll let you get back to lunch: Are you worried about how generative AI will exacerbate the problems you’re talking about? Is this something you’re hearing about from lawmakers and other experts in this space?

Frances Haugen said:

F: Generative AI will pour gasoline on the worst problems of social platforms because it will make it possible to generate information operations that look like real human beings.

Frances Haugen said:

F: We need to demand that social platforms design for trust and safety up front.

Frances Haugen said:

F: And the only way we’ll get that is by making them accountable for cutting corners that impact public safety.

Louise Matsakis said:

L: Thank you so much for taking the time to do this, Frances, and I’m looking forward to your book!

Frances Haugen said:

F: Thanks!

Frances Haugen said:

F: TTYS