The Scene

Tech entrepreneur David Sacks, known more recently for his podcast and political views, just launched a new generative AI-powered work chat app to compete with Slack.

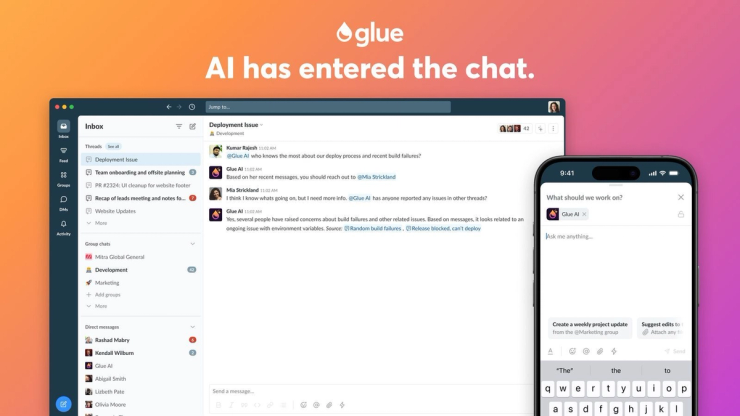

Glue, which Sacks co-founded with Evan Owen, formerly of data provider Clearbit, offers a streamlined interface and costs $7 a month per user on an annual plan. The founders are pitching it as a souped-up version of Slack, but without the overwhelming number of channels. The service synthesizes data from within Glue and from other company data sources, and it includes an all-knowing corporate AI assistant that’s always there.

Sacks, part of the “PayPal Mafia,” notes that people forget he was head of product at the payments firm. He is also one of the few entrepreneurs who is more than a one-hit wonder. After leaving PayPal, he founded the enterprise social networking company Yammer, which Microsoft bought for $1.2 billion in 2012.

But these days, Sacks has become more of a media personality through All In, a technology podcast that became popular during and after the pandemic.

He has also become a political figure, throwing his money and influence behind Republican candidates, including an upcoming fundraiser for Donald Trump, a move that can make you a pariah in some corners of the tech industry.

While All In frequently veers into politics, its best moments have come when Sacks and others on the show share their hard-won insight from years in Silicon Valley’s trenches.

It turns out that Sacks isn’t done being an entrepreneur, and the potential for disruption being ushered in by AI is too much to resist. I spoke to him and Owen about channel fatigue, what AI can do for work chats, and whether starting Glue will be as crazy as the early PayPal days.

The View From David Sacks and Evan Owen

Q: You are describing Glue as a better version of Slack, but what if Slack decides to copy you?

Sacks: From a data architecture standpoint, it would be very hard because one of the reasons why this works so magically is because there are no channels. They could copy us, but they’d have to completely revamp their app.

Owen: As soon as you become an enterprise company, your ability to innovate grinds to a halt just because your customers aren’t going to be okay with massive changes. As soon as you make changes as broad as what we’re doing, it’s a different product. I have connections inside Slack. Their product org is not taking this direction. You really need a new entrant who is rethinking how things are done.

Sacks: It would be smart for them to copy certain things. For example, letting the AI automatically give every thread a name and then allow you to @ mention the name. That would be something that’s discrete and maybe they could copy that. But here’s the problem: Let’s say it gets @ mentioned somewhere else and in some other channel, if people click that, they won’t be able to access the thread because they’re not in that channel. So again, the channels really get in the way. Unless they want to make really, really deep changes, it’s going to be hard.

Q: Is it also the same answer for Microsoft?

Sacks: I think so. Their Teams product is basically like a turbocharged version of Yammer, which kind of got assimilated into Teams. It’s a good product, but it’s kind of like the feed-based paradigm circa 2010, 2012.

Q: It does seem like there’s still an opening, despite Microsoft taking the big enterprise lead and launching a bunch of products.

Sacks: When Microsoft talks about AI, they’re talking about models. The good thing is we don’t have to do them. We’ve plugged into three models already. We’ll add three more. It’s really about usability. It’s about optimizing how users actually interact with this. It’s about user experience. That’s not Microsoft’s historic strength.

Let’s say they roll out a pretty good model. It’s going to be one of six. Who cares? We just integrate with all of them. It’s really about how you think through all the usability details in order to get the most value out of it and how you integrate with all of your other apps. Microsoft is also not that good at that because they’re usually thinking in terms of a proprietary stack. We’re thinking about like, well, we’re not going to do a calendar or we’re not going to do customer support, ticketing, whatever. So we have to think about how you integrate all that stuff from the beginning.

Q: Are you pulling in other apps as well? For instance, could you get Slack within Glue?

Owen: It’s currently a standalone. We are working on a Slack integration. We want to be able to interact with Slack Connect. That’s one thing a lot of people are using between companies.

But we really want to be the main communication tool for work. We think it can actually transform the way teams are communicating and help them be more productive. With Glue AI, you’ve actually got your AI assistant right there in your team chat. To make the most use of that, you have to have everybody there. So the other thing that we’re doing is making it really easy to just do a Slack import, pull all of your data from Slack into Glue and then you’ll immediately get the benefit of Glue AI across all that data in your new Glue workspace.

Sacks: One of the cool things about threads and having names is that you can @ mention them. And that creates a link.

Owen: So you can have all these sorts of backlinks, cross links, and very easily connect your conversations together in a way that makes sense. Whereas with a Slack thread, you can’t do it because a Slack thread doesn’t really have a handle.
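Owen doesn’t describe how Glue implements these links. As a rough illustration only, the Python sketch below assumes a made-up `@[Thread Name]` mention syntax and hypothetical `Workspace` and `Thread` classes. It shows why a named thread works as a handle: every mention of a known thread name can be recorded as a backlink on the target thread.

```python
import re
from dataclasses import dataclass, field

# Hypothetical mention syntax: a thread handle is written as @[Thread Name]
MENTION = re.compile(r"@\[([^\]]+)\]")


@dataclass
class Thread:
    name: str
    messages: list[str] = field(default_factory=list)
    backlinks: set[str] = field(default_factory=set)  # names of threads that mention this one


class Workspace:
    def __init__(self) -> None:
        self.threads: dict[str, Thread] = {}

    def create_thread(self, name: str) -> Thread:
        thread = Thread(name)
        self.threads[name] = thread
        return thread

    def post(self, thread_name: str, text: str) -> None:
        self.threads[thread_name].messages.append(text)
        # Each @-mention of an existing thread becomes a backlink on that thread;
        # mentions of unknown names are ignored rather than creating new threads.
        for target in MENTION.findall(text):
            if target in self.threads and target != thread_name:
                self.threads[target].backlinks.add(thread_name)
```

For example, posting “Blocked until @[iOS crash on login] is fixed” in a “Q3 launch plan” thread would leave “Q3 launch plan” in the crash thread’s `backlinks`. A channel-based message has no comparable name to point at, which is the contrast Owen draws.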

Q: And can you make your threads private to just those people in the group?

Owen: If you add a group, it’s available to anyone in that group and nobody else. So you can have private groups with just two or three people and it’s sort of like a private channel.

Sacks: This is a real example. We use Glue at Craft Ventures. We’ve been using it for a long time. Erica Peterson happens to be our office and events manager. I did not tell the AI that. All I did was say, based on our conversations in Glue, what is Erica’s role and her responsibilities at Craft, and it figured it out. It found eight threads and synthesized them and says she serves as the events and office manager at Craft. That’s amazing.

Then it gives you all the sources, and these are all links to threads that were relevant for answering this question. So it’s a good example of how this is not something you could ever answer just by going to ChatGPT. This is something that AI is figuring out using your internal company data.

Q: Will the data all come from information that is in Glue?

Sacks: Not completely. We have the concept of apps. So you’ll be able to connect your apps and the AI will be able to access some of that data. A really big one is Google Docs. And it can do attachments already.

Q: Is it using retrieval augmented generation [that uses specific data sources as the basis for responses to prompts]?

Owen: Yes, it’s using RAG but across multiple data sources. We have buckets and collections for different data sources securely indexed, and then we’re doing RAG at query time. But we do it in a way that not only analyzes your question, but also analyzes the context of where you’re asking it, like who’s on the thread, the subject of the thread, to figure out what is the best way to query to get the right information.
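Glue hasn’t published its retrieval pipeline, so what follows is a minimal sketch under stated assumptions, not the company’s method. It illustrates the idea Owen describes, folding the thread’s subject and participants into the query alongside the question, and it swaps in crude word-overlap scoring where a real system would use embeddings or a search index. `Doc`, `retrieve`, `build_prompt`, and the bucket names are all hypothetical.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Doc:
    source: str  # which bucket it came from, e.g. "glue" or "google_docs" (hypothetical names)
    doc_id: str
    text: str


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_query(question: str, thread_subject: str, participants: list[str]) -> Counter:
    # Fold the thread context (its subject and who is on it) into the query, not just the question.
    return Counter(tokens(question) + tokens(thread_subject) + tokens(" ".join(participants)))


def score(query: Counter, doc: Doc) -> int:
    # Crude lexical overlap, standing in for a real embedding or search-index lookup.
    doc_terms = Counter(tokens(doc.text))
    return sum(min(n, doc_terms[t]) for t, n in query.items())


def retrieve(question: str, thread_subject: str, participants: list[str],
             buckets: dict[str, list[Doc]], k: int = 3) -> list[Doc]:
    query = build_query(question, thread_subject, participants)
    # Search every indexed bucket at query time and keep the best non-zero matches.
    scored = [(score(query, d), d) for docs in buckets.values() for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for s, d in scored[:k] if s > 0]


def build_prompt(question: str, docs: list[Doc]) -> str:
    # Tag each snippet with its source so the answer can link back to it.
    context = "\n".join(f"[{d.source}:{d.doc_id}] {d.text}" for d in docs)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"
```

In this toy version, asking “What are the key risks?” inside a thread titled “Acme deal” ranks an Acme note above an otherwise similar note about a different deal, because the thread subject contributes query terms the bare question lacks.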

Q: What AI models are you using?

Owen: Currently, we are actually model agnostic. I think we’re going to probably work with our own models in the future, but what we really want to show here is that the power is in the context. The models are continuously improving. Every provider is releasing a better model every month, practically. We want to be ready for that. You choose the model you want to use here. We’re going to have [Elon Musk’s] Grok in a little bit to have a faster, but maybe not quite as accurate option.
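Being “model agnostic” usually means putting every provider behind one interface so users can pick a model per request. The sketch below assumes that design. `ModelRegistry` and the model names are hypothetical, and the stub lambdas stand in for real provider SDK calls.

```python
from typing import Callable

# A "model" here is any function from prompt to completion.
# Real entries would wrap each provider's own SDK call.
ModelFn = Callable[[str], str]


class ModelRegistry:
    def __init__(self) -> None:
        self._models: dict[str, ModelFn] = {}

    def register(self, name: str, fn: ModelFn) -> None:
        # Adding a newly released model is just one more registration.
        self._models[name] = fn

    def available(self) -> list[str]:
        return sorted(self._models)

    def complete(self, model_name: str, prompt: str) -> str:
        # The user's chosen model handles the request; everything upstream stays the same.
        if model_name not in self._models:
            raise KeyError(f"unknown model {model_name!r}; choose one of {self.available()}")
        return self._models[model_name](prompt)


# Stub models for illustration only (hypothetical names).
registry = ModelRegistry()
registry.register("accurate-model", lambda prompt: f"accurate: {prompt}")
registry.register("fast-model", lambda prompt: f"fast: {prompt}")
```

The design choice is that retrieval and prompt building happen before the model call, so a faster but less accurate option can be swapped in without touching the rest of the pipeline.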

AI is moving so quickly, we want to be the hub where all of your data is coming together within your team communications, and have the best AI models to provide those answers.

Q: All the big companies are offering this through big enterprise customers. Are you going after smaller companies at first, like startups?

Sacks: Probably initially, but there’s no reason that we can’t get bigger companies to use it, too. That was a pattern at Yammer and Slack. You start with early adopters and then move up market.

But you’re right, these large enterprises are spending millions of dollars doing these big complicated enterprise AI projects. Corporates were spending millions of dollars building social intranets. Then Yammer came along and just spread like wildfire, with $5-a-seat SaaS licenses. And nobody needed a multi-million-dollar corporate intranet anymore.

There’s going to be something similar here. The place that AI should live in the enterprise is in the chat. Because you don’t want to go off to some separate ChatGPT-like app to do your chat and then somehow bring that back to your Slack or whatever. You want to be in one place.

You want to make ChatGPT multiplayer and you want it to be connected to all of your enterprise content that’s already in your apps.

Q: Have you done a needle-in-the-haystack experiment where you put some piece of information into Glue and see if you could find it with AI?

Owen: It’s pretty good. As model context windows get larger, as RAG gets better, we’re going to be benefiting from that.

Q: You call it an agent. Are there things it can do that are agent-like?

Owen: That is the way we’re thinking of where this is going. We won’t have agent behavior at launch. But that is really what we believe is the future, that AI will be joining you as a teammate, an assistant, and helping you get work done.

Q: What are some of the things you’d like to see it do as the models get more capable?

Sacks: A big one is the context window getting bigger. Imagine running very complicated queries across data sources all over your organization. That’s where it’s all headed. We’ve asked Glue to compare two deals that we’re looking at. It does a pretty good job. It’s pretty amazing actually.

You can ask about company 1 and company 2, and each one already has its own thread. In that thread is a bunch of commentary, and it might have customer reference calls and a pitch deck. The AI takes all of that in and then will give you a compare and contrast.

Q: What’s a good example of how this is going to leverage apps like Google Docs, for instance?

Sacks: In one case, somebody had created a thread called Meta earnings call. Somebody else had created a thread called Nvidia earnings call. And then inside those threads, they attached a transcript of the earnings call and the AI was able to read all of this, synthesize it, and then write this document summarizing three key themes. You can also ask what’s the difference between Meta’s and Nvidia’s AI strategies and it will give me an answer based on referencing these things. So if you think about Google Docs, it just means you won’t have to attach anything anymore. It’ll just grab all that stuff.

Q: Are there network effects here, where the more information you put into Glue, the better the AI will get?

Owen: Definitely. We see your communication as your living knowledge base. It’s the information that’s the most up to date, the most relevant. People are very lazy about moving things into official documents, but it’s all in your communication tool.

As models get faster and more affordable, we could actually preemptively look at the questions that are being asked and suggest to somebody: ‘Hey, it looks like you’re asking this question that four other people ask when they’re onboarding. Here’s the answer.’ Or, maybe you should add this person to this thread, because they’re an expert in this iOS problem you’re trying to debug. You can save so much time by having all that information together and being able to use it right when it’s needed.

Q: How do you segregate data so employees can’t access information they shouldn’t?

Sacks: Glue AI knows who’s on the recipients list and what their permissions are. One good thing about putting the AI in chat is 90-plus percent of the content in your chat app is open to the whole company. So you just tell the AI ‘don’t look at DMs unless the user is talking one on one, DMing the AI.’
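Sacks describes the rule only at a high level. One plausible reading, sketched here with hypothetical names and visibility labels, is that the assistant may draw on a message only if everyone who will see its answer already has access, and that DMs qualify only when a single user is talking to the AI one on one. This is an assumption about the policy, not Glue’s actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    text: str
    visibility: str  # "public", "group", or "dm" (hypothetical labels)
    members: frozenset[str] = frozenset()  # who can read a group or DM; unused for public


def can_use(msg: Message, recipients: frozenset[str]) -> bool:
    """May the assistant draw on msg when answering the people in recipients (AI excluded)?"""
    if msg.visibility == "public":
        return True  # open to the whole company
    if msg.visibility == "group":
        # Everyone who will see the answer must already be in the group.
        return recipients <= msg.members
    if msg.visibility == "dm":
        # Only when one user is DMing the AI, and only that user's own DMs.
        return len(recipients) == 1 and recipients <= msg.members
    return False  # unknown visibility: fail closed


def usable_context(messages: list[Message], recipients: frozenset[str]) -> list[Message]:
    # Filter candidate context before retrieval ever sees it.
    return [m for m in messages if can_use(m, recipients)]
```

Because most chat content is public, this filter removes relatively little in practice, which is the advantage Sacks points to.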

Q: How do you improve the product and learn from the information in Glue if it’s private and segregated in that way?

Owen: It’s a good question. We will have to experiment and be careful. But one thing we want to start doing is learning about your company and your employees. What is it that you do as a company? Customer types, project types. What is each person good at? We can start building up this background information about you, of course within the secure walls of your workspace. Once we do that, we can tailor the responses to your specific use cases and conversations.

Q: Would this be as compelling if it weren’t for the AI component?

Sacks: Even before AI came along, people had channel fatigue from Slack and thought there has to be a better way. People really just need threads instead of channels. But then ChatGPT comes along and it literally does that. I don’t think [OpenAI] really thought about what their chat model was going to be. They just did what felt natural. And that was basically to create these topic-based threads with AI and there’s no channels. You can kind of see in our inbox model, it looks a lot like ChatGPT because it’s a similar type of paradigm.

So there would have been a reason to upgrade from chat before, but I think AI takes it to another level. Our discovery in doing this was that the topic-based threads model isn’t only better for AI conversations, it’s better for human conversations as well.

Owen: The reason that you need to have a contextual thread for AI is because it’s very sensitive to noise. If you have a lot of other information, it’s really hard for the AI to figure out what is relevant to the questions being asked. And honestly, people have the same problem. And sure, we can fight through it and figure it out. Or you could just make it easy and have a thread that’s on one topic. We actually started working on this before ChatGPT really took off. We really believe that that is the best way to communicate at work.

Q: David, you’re now a big media figure, a political figure. What made you want to roll up your sleeves and do this again?

Sacks: I’ve always had a passion for this product and I feel like there were things we just never had the chance to do at Yammer before we sold the company to Microsoft. Evan and I had that in common because he had worked on a previous chat company as well, Zinc, which he sold to ServiceMax. I feel like we both had kind of unfinished business.

People forget that I was a product guy before becoming a podcaster, whatever you want to call me. Superstar? (laughs). I was the head of product at PayPal. I’ve always had an interest in this. Then AI came along and took it to another level. It creates a disruption in the market that’s big enough that you can come along with a new paradigm in chat and maybe disrupt the status quo.

If you were just coming along and your advantage was just usability, I think that’s hard, but AI is just shaking things up in a big enough way that you can see a new chat app emerging out of this.

Q: Will it be as harrowing as the PayPal days?

Sacks: I don’t think anything could be. It was in the midst of the dot-com crash. You know, Yammer was actually a really smooth experience. But look, I’m not on the front lines every day doing all the work. I’m co-founder, chairman. Evan is co-founder and CEO, which is great because I love being involved in the product and strategy. But the day-to-day operations, I’m very happy to have partners to run that.

It’s at the intersection of a lot of really interesting trends right now and a lot of interesting disruptions. We’ve seen AI at the consumer level. And the way it works is you use a chat app, whether it’s ChatGPT or Anthropic or Perplexity. We’re still trying to figure out how it fits into the enterprise. Where’s the main interface going to be? Our theory is that it should obviously live in your work chat. It sounds kind of obvious, but that’s not exactly the case.